Enterprise LLM Guardrails

As LLMs become mission-critical to business operations, ensuring they operate safely and responsibly is paramount. Our AI Gateway offers multiple layers of guardrails against prompt injections, hallucinations, unsafe content, and data leakagekeeping your AI deployments secure and compliant with industry regulations.

Protect your AI applications with enterprise-grade guardrails that prevent misuse, ensure compliance, and maintain data privacy.

Our comprehensive LLM Guardrails Platform provides multi-layered protection against prompt injections, hallucinations, and unsafe content.

The LLM Safety Challenge

Without robust guardrails, LLM applications pose significant risks to enterprises:

Critical Security Risks

- Prompt Injections: Attackers can manipulate LLMs to bypass restrictions or extract sensitive data

- Hallucinations: LLMs can generate false, misleading, or harmful information

- PII Exposure: Customer data and sensitive information may be leaked in responses

- Content Moderation Failures: Inappropriate or harmful content generation can damage reputation

- Compliance Violations: Industry regulations and privacy laws require controlled AI use

Protection Requirements

- Real-time Validation: Immediate checks on all LLM inputs and outputs

- Multi-layer Security: Defense in depth with multiple validation methods

- Automated Enforcement: Consistent policy application across all AI interactions

- Comprehensive Monitoring: Full visibility into AI system behavior

- Audit Trail: Detailed logging of all validation decisions

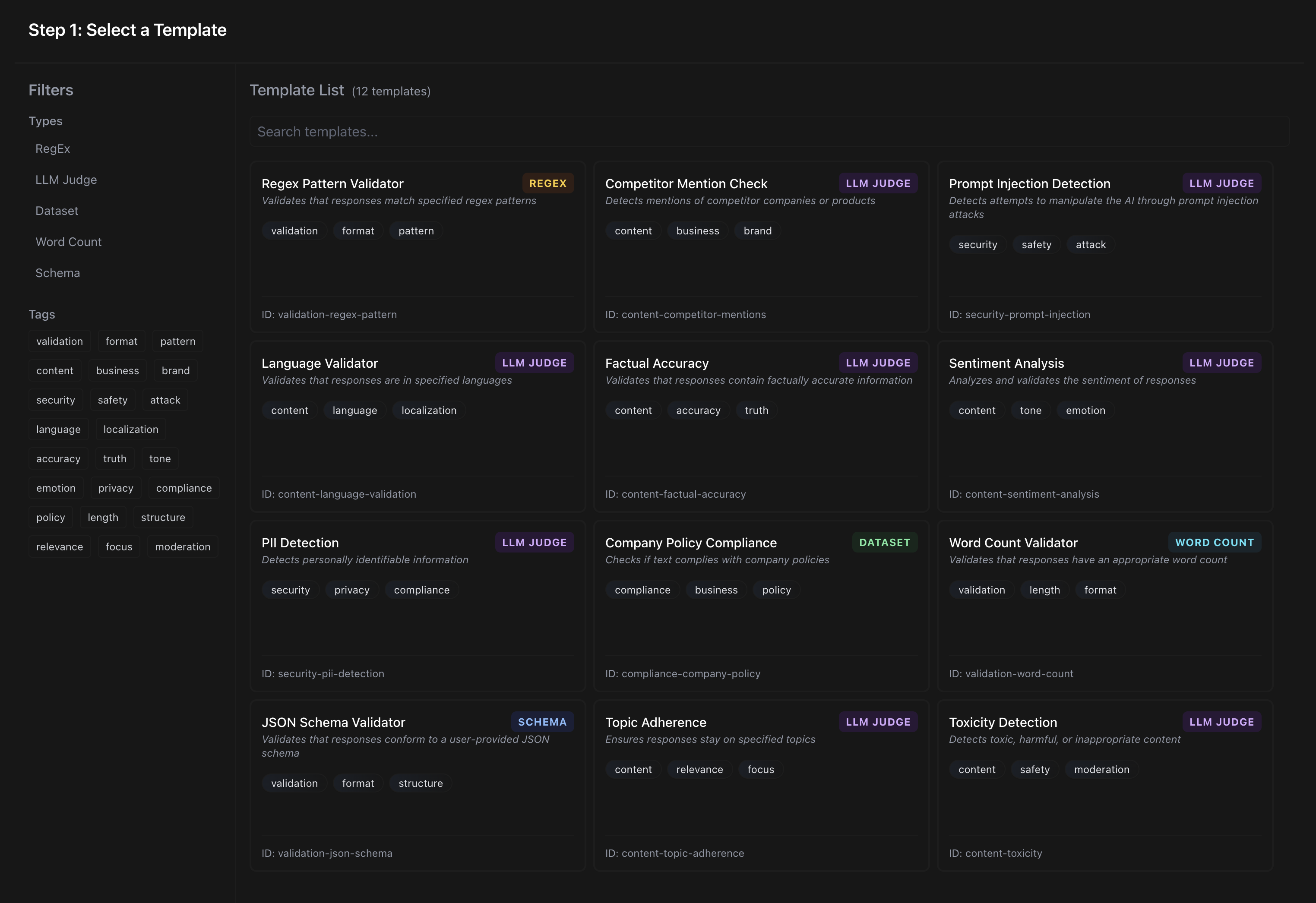

Four-Layer Guardrail Architecture

Our comprehensive guardrail system combines multiple validation approaches to ensure maximum protection and accuracy.

Deterministic Guardrails

Rule-based validation using predefined patterns and schemas for immediate, reliable checks.

- •JSON Schema: Enforce strict response structure and data types

- •Regex Validation: Pattern matching for specific formats (emails, dates, etc.)

- •Token Limits: Control response length and complexity

- •PII Detection: Block sensitive data using pattern matching

LLM as Judge

Leverage specialized LLMs to evaluate content quality, safety, and compliance.

- •Content Safety: Detect toxic, harmful, or inappropriate content

- •Prompt Injection: Identify and block malicious prompt manipulation

- •Policy Compliance: Ensure adherence to company guidelines

- •Factual Verification: Cross-check responses for accuracy

Dataset-Based Validation

Compare responses against known datasets using vector similarity search.

- •Attack Detection: Match against known attack patterns

- •Content Fingerprinting: Compare with approved content templates

- •Reference Data: Validate against trusted information sources

- •Dynamic Learning: Continuously update validation patterns

Partner Integrations

Enhance protection with specialized third-party security services.

- •OpenAI Moderation: Built-in content filtering

- •Azure Content Safety: Enterprise-grade content analysis

- •Industry Solutions: Domain-specific security tools

- •Custom Integration: Connect your security infrastructure

Ready to Secure Your AI Applications?

LangDB's guardrails platform provides the protection you need to deploy AI with confidence. Our comprehensive approach ensures your AI systems operate safely, responsibly, and in compliance with your organization's requirements.

Resources

Explore our comprehensive documentation to learn more about implementing LLM guardrails.