Tracing

LLM applications rely on increasingly complex abstractions, including chained models, tools, and advanced prompts. LangDB's nested traces help you understand what happens at each step and find the root cause of problems.

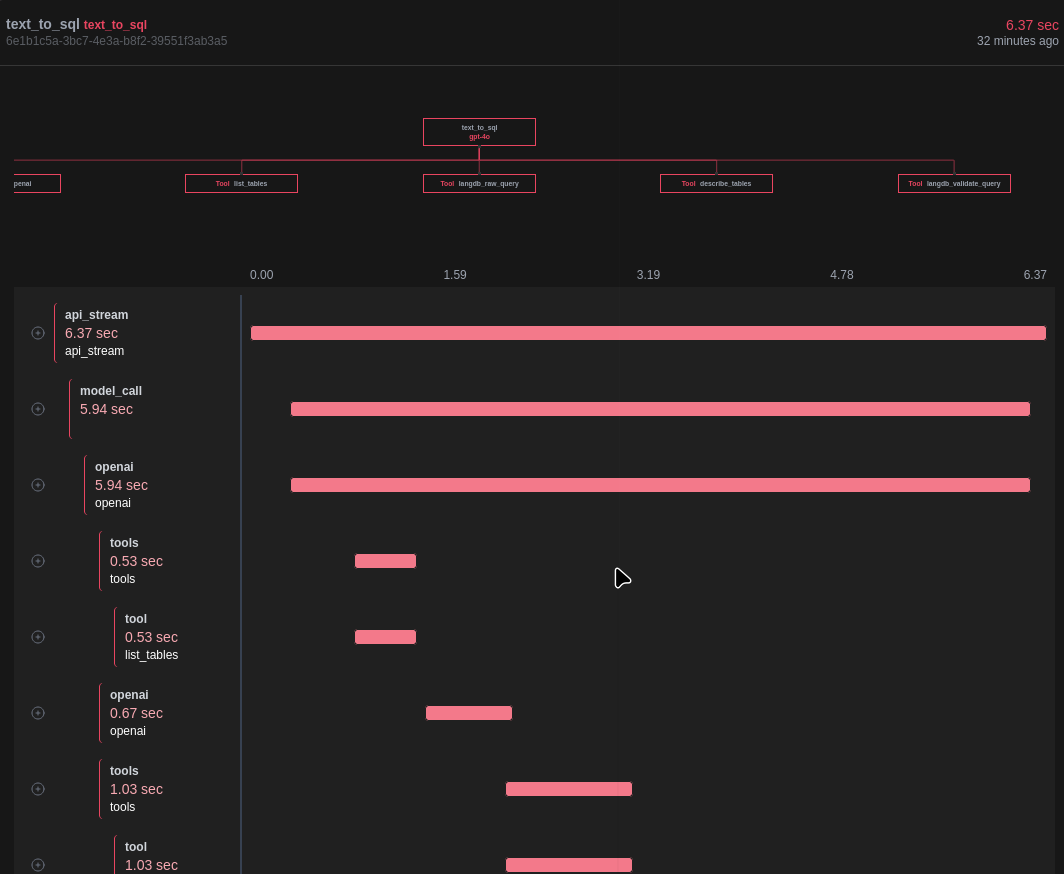

Trace in LangDB

A trace in LangDB is divided into spans, each of which can be expanded to show more information about a specific operation.
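To make the idea of nested spans concrete, here is a minimal sketch of a trace modeled as a tree. The `Span` class, its fields, and the `tool_name` attribute key are hypothetical and are not LangDB's actual schema. The span names come from this page, but the exact parent/child nesting shown is an assumption.

```python
from dataclasses import dataclass, field
from typing import Any, Dict, Iterator, List, Tuple


@dataclass
class Span:
    """Hypothetical in-memory model of a span; not LangDB's actual schema."""
    name: str
    attributes: Dict[str, Any] = field(default_factory=dict)
    children: List["Span"] = field(default_factory=list)

    def walk(self, depth: int = 0) -> Iterator[Tuple[int, "Span"]]:
        """Yield (depth, span) pairs in depth-first order."""
        yield depth, self
        for child in self.children:
            yield from child.walk(depth + 1)


def render(span: Span) -> str:
    """Render the span tree as an indented outline, one span per line."""
    return "\n".join("  " * depth + s.name for depth, s in span.walk())


# Span names follow this page; the nesting shown is an assumption.
trace = Span("api_stream", children=[
    Span("model_call", children=[
        Span("openai"),
        Span("tool", {"tool_name": "list_tables"}),
        Span("openai"),
    ]),
])

print(render(trace))
```

Expanding a span in the UI roughly corresponds to descending one level in a tree like this one.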

Spans

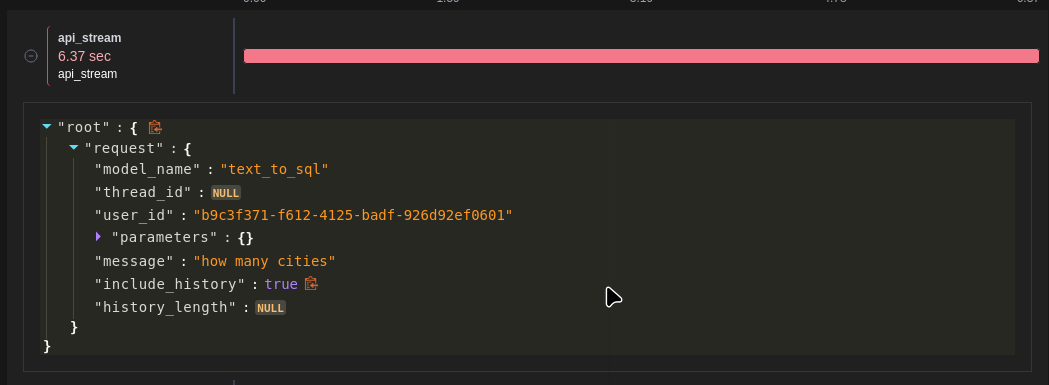

API Stream

When we execute a SQL query, we get an api_stream span. It contains the details of the executed query.

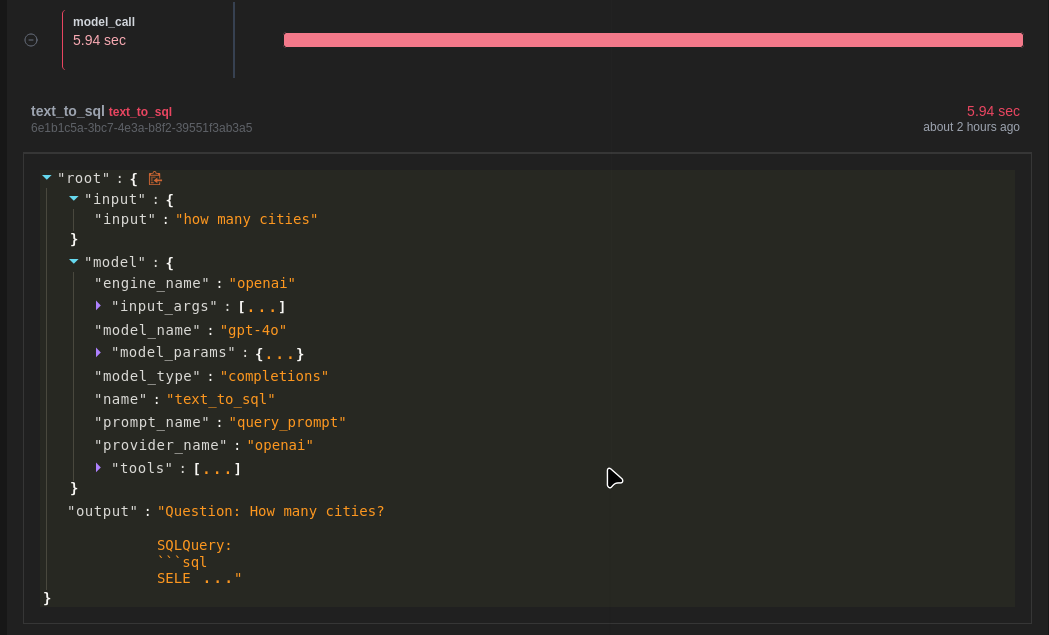

Model Call

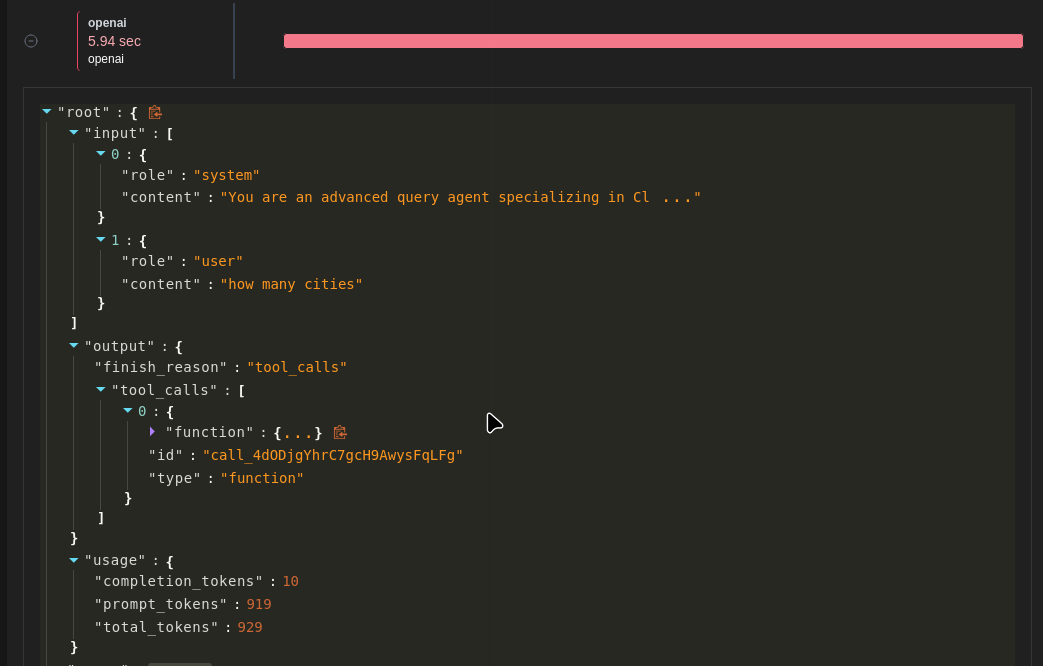

We get a model_call span, which contains the exact API call sent to the LLM provider, in this case OpenAI.

Intermediate Model Response

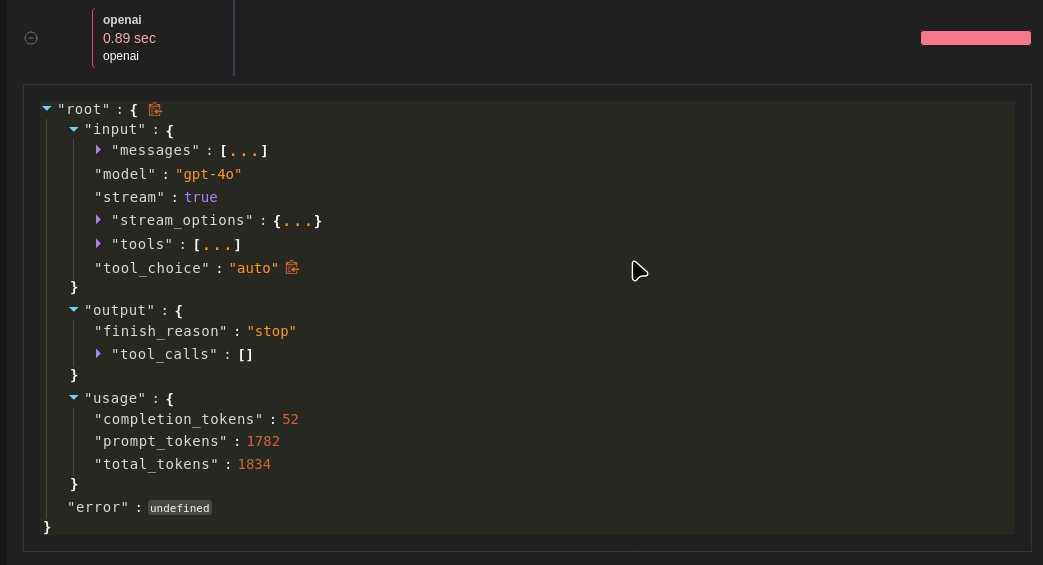

After making the call to OpenAI, we get a response from the provider. It is recorded in the provider span, which is named openai here.

In the model response, we can see that the model has decided to use one of the attached tools.

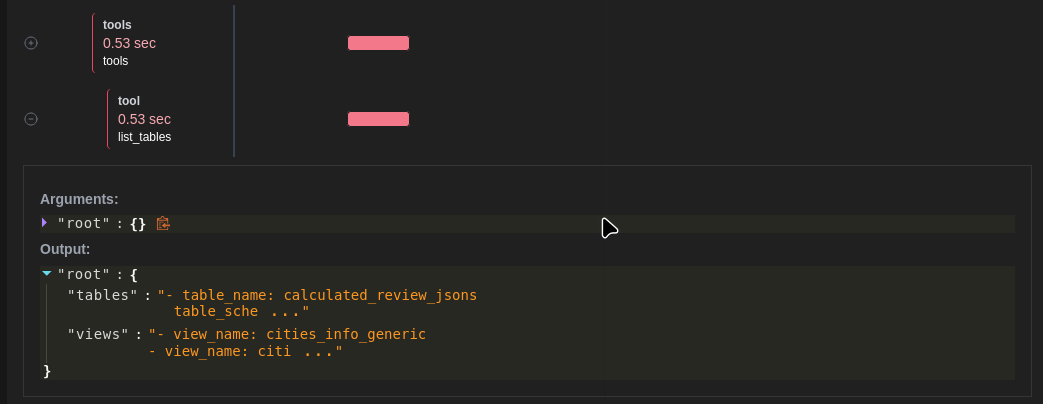
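The tool decision described above can also be checked programmatically. The sketch below assumes the recorded response follows the OpenAI Chat Completions shape, where an assistant message carries a `tool_calls` list. How LangDB stores this payload inside the span is not confirmed here. The `requested_tools` helper and the sample payload are illustrative only.

```python
import json
from typing import Any, Dict, List


def requested_tools(response: Dict[str, Any]) -> List[Dict[str, Any]]:
    """Return the name and parsed arguments of each tool the model asked for."""
    message = response["choices"][0]["message"]
    calls = message.get("tool_calls") or []
    return [
        {
            "name": call["function"]["name"],
            # The provider sends arguments as a JSON-encoded string.
            "arguments": json.loads(call["function"]["arguments"] or "{}"),
        }
        for call in calls
    ]


# Illustrative payload only: the id is a placeholder, not real trace data.
sample_response = {
    "choices": [{
        "finish_reason": "tool_calls",
        "message": {
            "role": "assistant",
            "content": None,
            "tool_calls": [{
                "id": "call_placeholder",
                "type": "function",
                "function": {"name": "list_tables", "arguments": "{}"},
            }],
        },
    }],
}

print(requested_tools(sample_response))
```

An empty result means the model answered directly, so no tool span would be expected to follow.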

Tool Call

The tool span contains information about the tool executed by the model, including both its input and its output. In this case, list_tables from Static Tools was executed.

Final Model Response

At the end of the chain of API calls, we have a provider span again. It includes all the messages, tool calls, and token usage from start to finish.